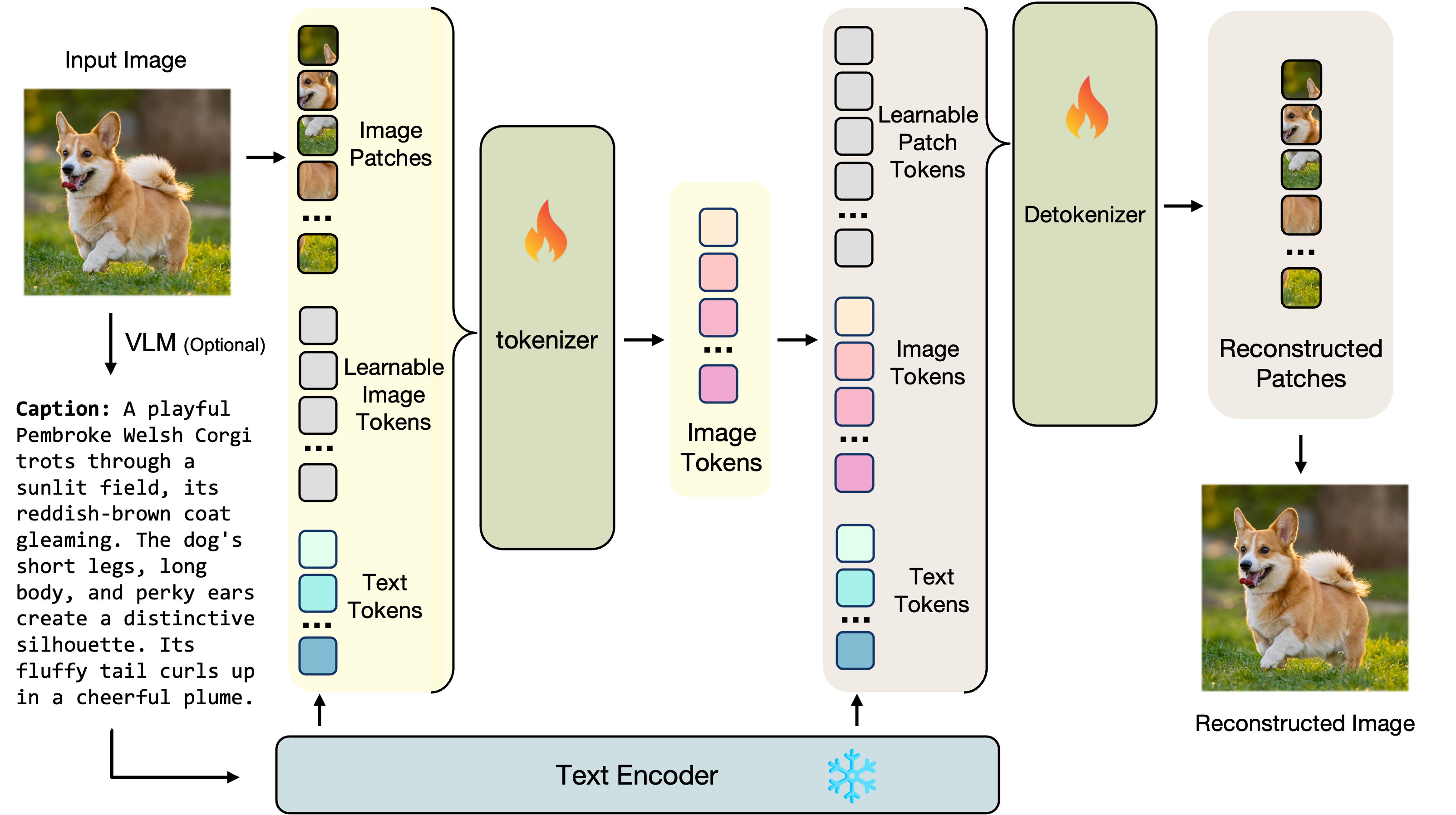

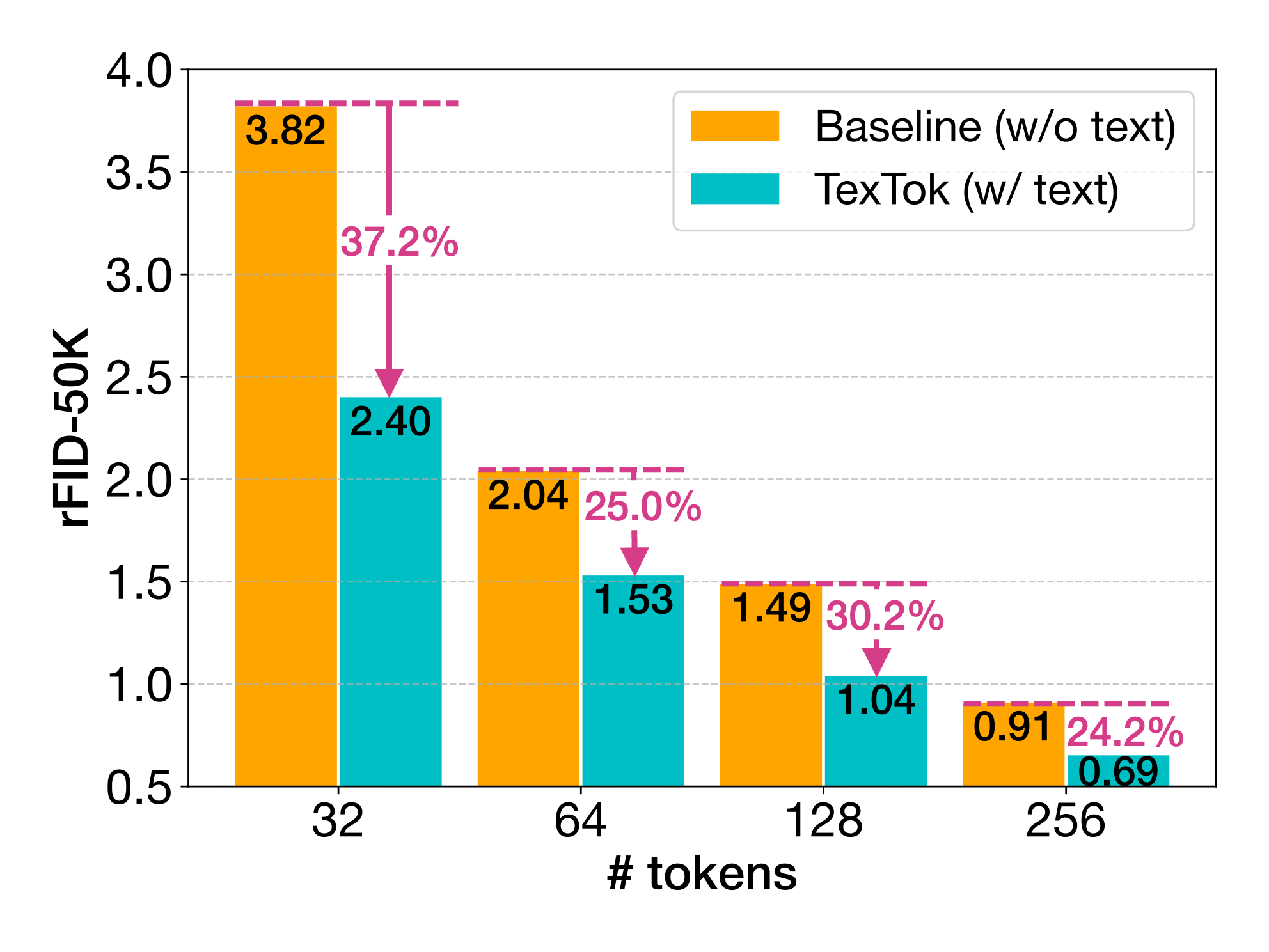

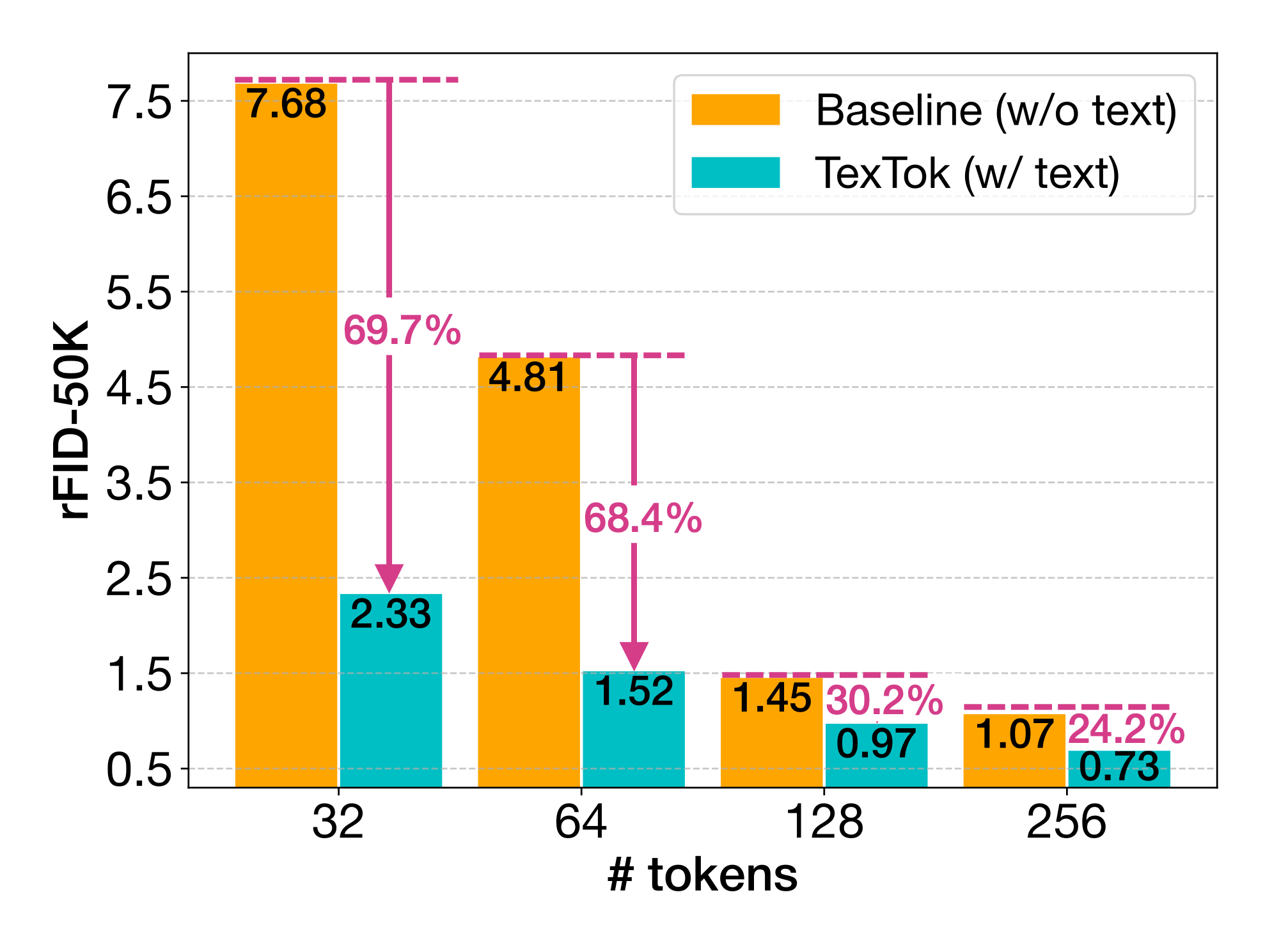

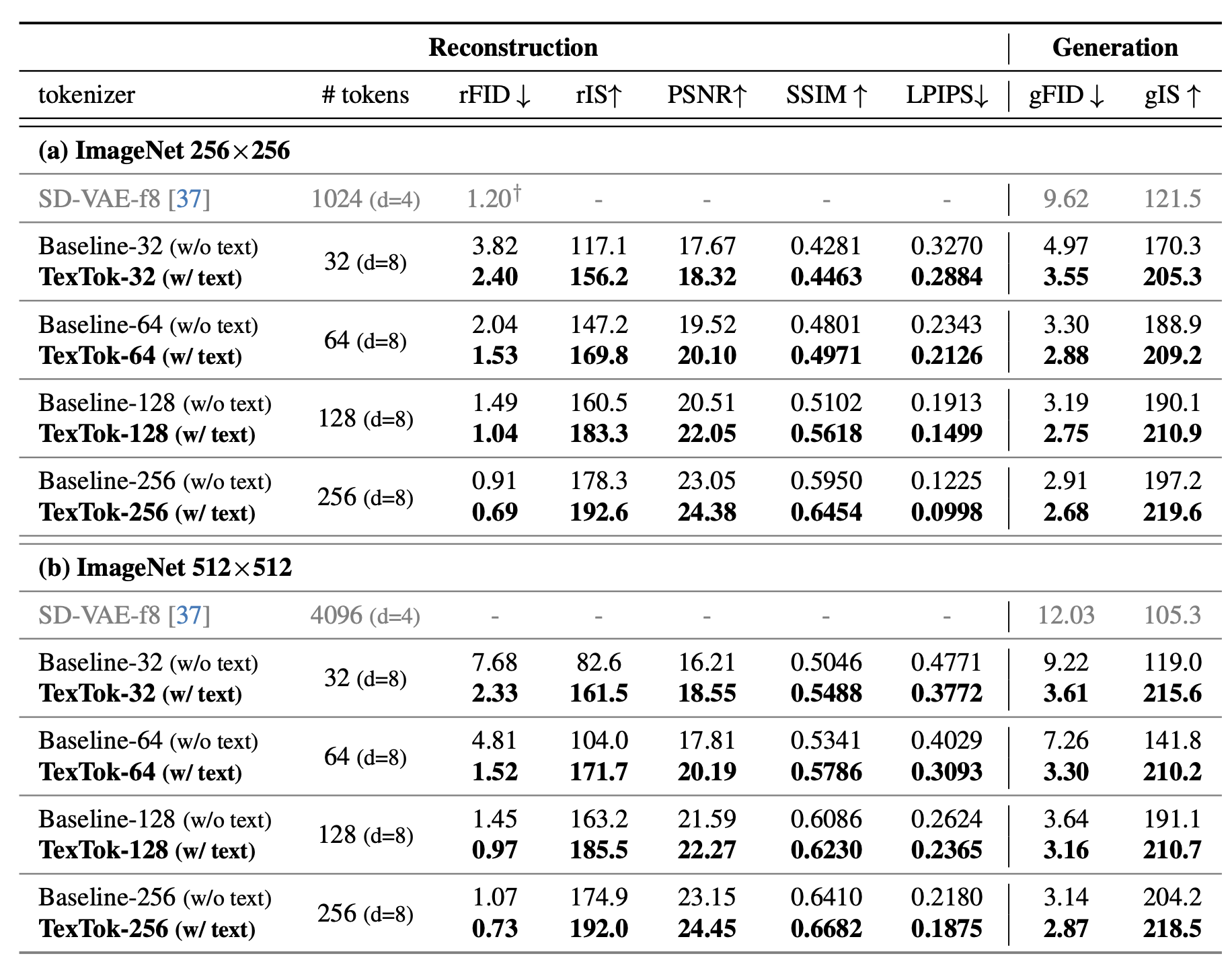

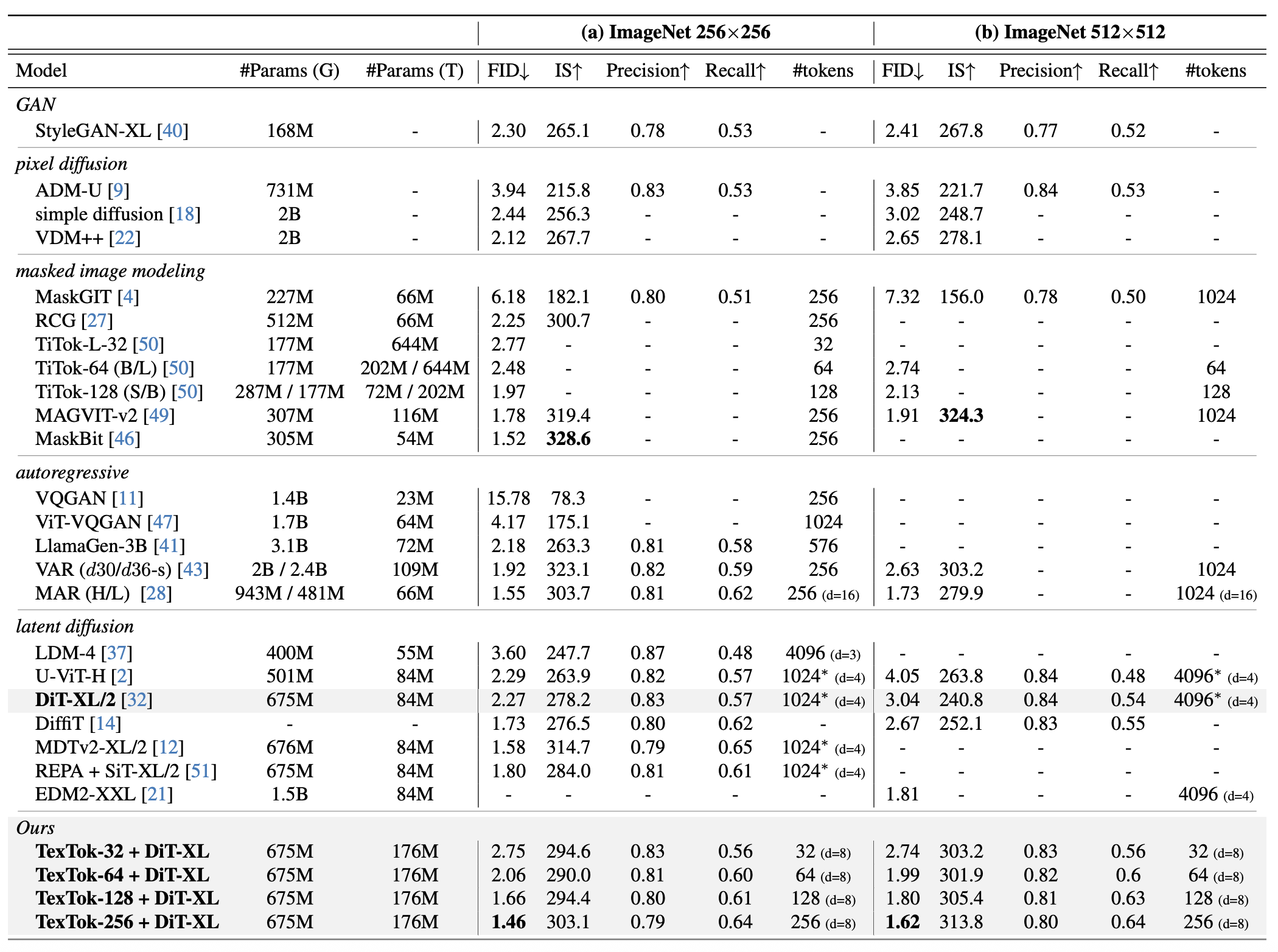

Image tokenization, the process of transforming raw image pixels into a compact low-dimensional latent representation, has proven crucial for scalable and efficient image generation. However, mainstream image tokenization methods generally have limited compression rates, making high-resolution image generation computationally expensive. To address this challenge, we propose leveraging language for efficient image tokenization, a method we call Text-Conditioned Image Tokenization (TexTok). TexTok is a simple yet effective tokenization framework that uses language to provide a compact, high-level semantic representation. By conditioning the tokenization process on descriptive text captions, TexTok simplifies semantic learning, allowing more learning capacity and token space to be allocated to fine-grained visual details, which leads to better reconstruction quality and higher compression rates. Compared to a conventional tokenizer without text conditioning, TexTok achieves average reconstruction FID (rFID) improvements of 29.2% and 48.1% on the ImageNet-256 and ImageNet-512 benchmarks, respectively, across varying numbers of tokens. These tokenization improvements consistently translate into average generation FID (gFID) improvements of 16.3% and 34.3%. By simply replacing the tokenizer in Diffusion Transformer (DiT) with TexTok, our system achieves a 93.5× inference speedup on ImageNet-512 while still outperforming the original DiT, using only 32 tokens. TexTok with a vanilla DiT generator achieves state-of-the-art FID scores of 1.46 and 1.62 on ImageNet-256 and ImageNet-512, respectively. Furthermore, we demonstrate TexTok's superiority on text-to-image generation, where it effectively uses off-the-shelf text captions during tokenization.
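The percentage improvements above are relative FID reductions with respect to the tokenizer without text conditioning (lower FID is better). The page does not state exactly how the average over token counts is computed; the sketch below assumes a simple mean of the per-token-count relative improvements. The function names are hypothetical and only illustrate the arithmetic.

```python
from statistics import mean


def relative_improvement(baseline_fid: float, new_fid: float) -> float:
    """Relative FID reduction in percent; positive means the new model is better."""
    if baseline_fid <= 0:
        raise ValueError("baseline FID must be positive")
    return 100.0 * (baseline_fid - new_fid) / baseline_fid


def average_relative_improvement(baseline: dict[int, float], textok: dict[int, float]) -> float:
    """Mean of per-token-count relative improvements (an assumed averaging scheme).

    Both dicts map a token count (e.g. 32, 64, ...) to the FID measured at that count.
    """
    shared = sorted(baseline.keys() & textok.keys())
    if not shared:
        raise ValueError("no shared token counts to compare")
    return mean(relative_improvement(baseline[n], textok[n]) for n in shared)
```

Averaging relative improvements (rather than comparing averaged FIDs) weights each token budget equally, which is one plausible reading of "across varying numbers of tokens".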

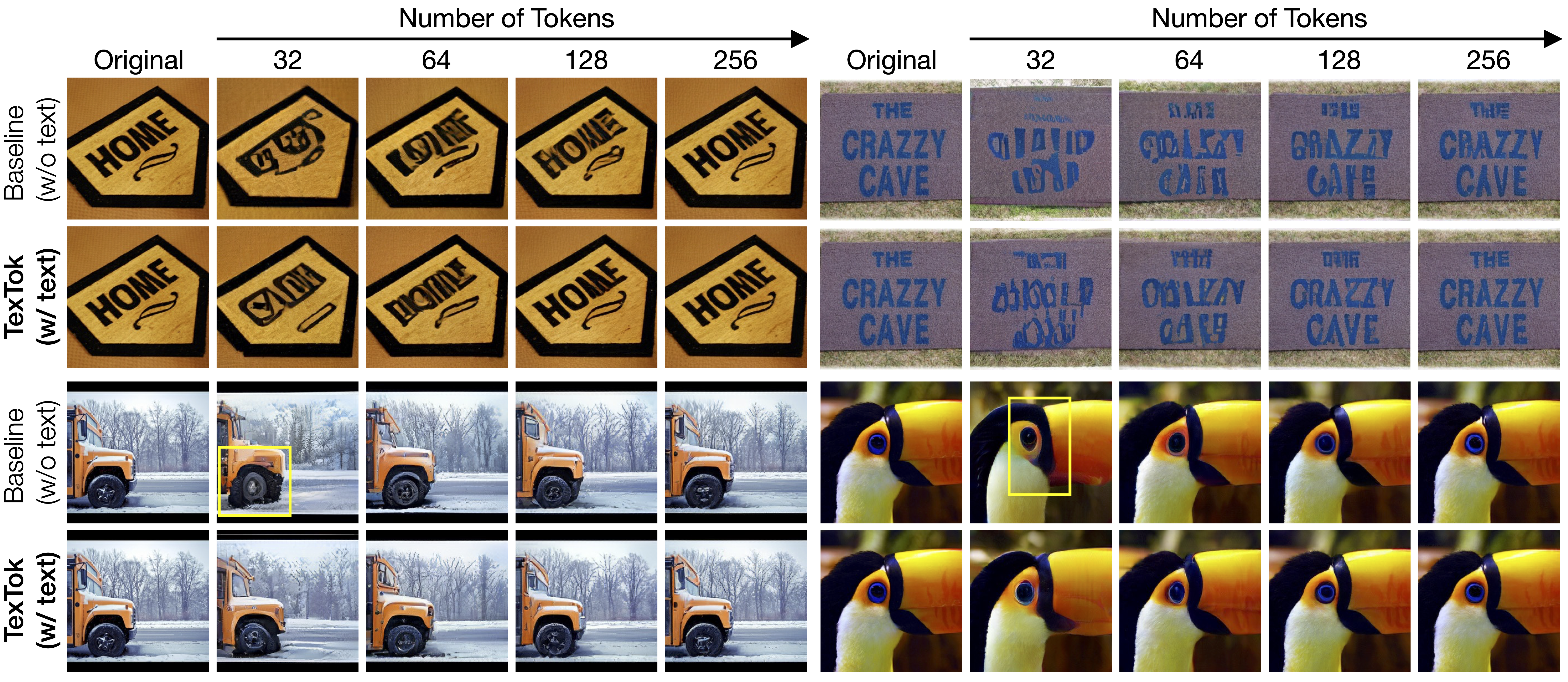

High compression rate: Low cost, but poor quality.

Low compression rate: Good quality, but high cost.

Can we achieve the best of both worlds, i.e., low cost and high quality?
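The cost side of this trade-off comes from sequence length: a higher compression rate means fewer latent tokens for the generator to process, and self-attention cost grows quadratically with the number of tokens. The sketch below assumes a DiT-style setup in which a 2D latent is split into square patches; for reference, the original DiT-XL/2 uses the SD-VAE (8× spatial downsampling) with patch size 2. The helper names are hypothetical, and the arithmetic only shows why fewer tokens is cheaper; it does not reproduce the measured 93.5× speedup, which also depends on the non-attention layers and model configuration.

```python
def num_latent_tokens(image_size: int, downsample: int, patch_size: int) -> int:
    """Number of tokens a DiT-style transformer sees for a square image.

    Assumes a 2D latent of side image_size / downsample, split into
    non-overlapping patch_size x patch_size patches.
    """
    if image_size % downsample or (image_size // downsample) % patch_size:
        raise ValueError("image size must divide evenly by downsample * patch_size")
    side = image_size // downsample // patch_size
    return side * side


def relative_attention_cost(tokens_a: int, tokens_b: int) -> float:
    """Ratio of self-attention score FLOPs, which scale with sequence length squared."""
    return (tokens_a / tokens_b) ** 2


# DiT-XL/2 at 512x512: 512 / 8 = 64 latent side, 64 / 2 = 32 patches per side.
dit_tokens = num_latent_tokens(512, downsample=8, patch_size=2)
print(dit_tokens)                               # 1024
print(dit_tokens // 32)                         # 32 times fewer tokens than a 32-token tokenizer
print(relative_attention_cost(dit_tokens, 32))  # 1024.0 for the attention-score term alone
```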

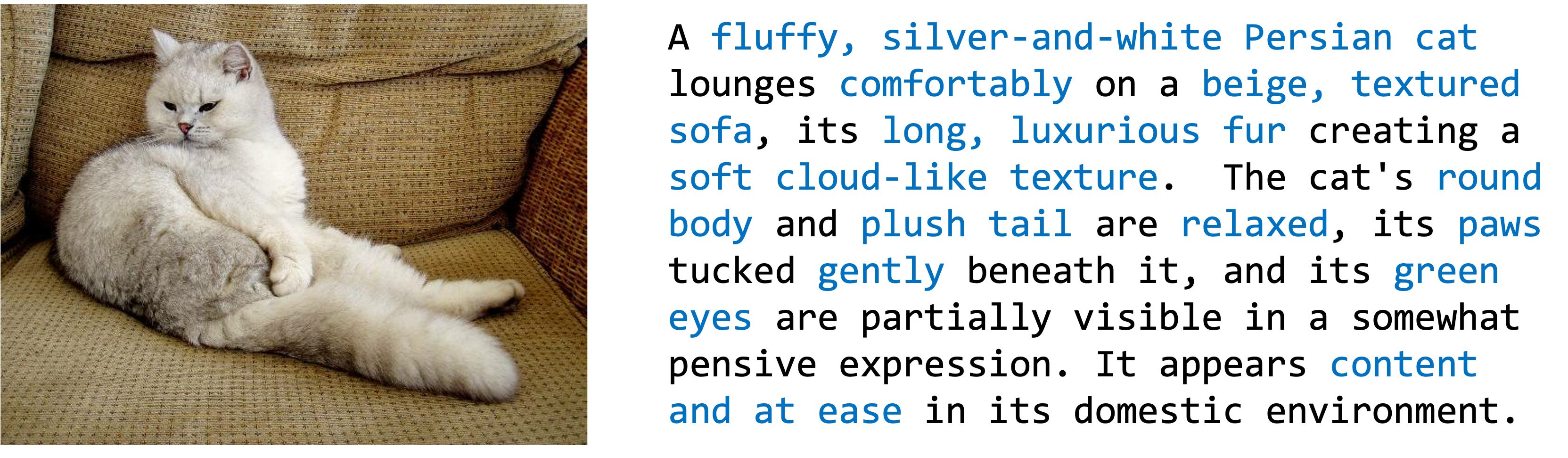

The most compact and comprehensive representation of an image is its caption.

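By providing the text caption to the tokenizer, the tokenizer can rely on the text for high-level semantics and devote more of its learning capacity and token space to fine-grained visual details.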
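To make the data flow concrete, here is a toy, untrained sketch of a text-conditioned tokenizer in NumPy. It assumes a ViT-style design in which caption embeddings are projected and appended to the token sequence in both the encoder and the decoder, and in which a set of learnable latent slots becomes the compact image tokens. Everything here is a hypothetical illustration: the class name, the single attention layer, the random weights, and the random stand-in for caption embeddings (a real system would obtain them from a pretrained text encoder). It is not the authors' implementation.

```python
import numpy as np


def attention_block(x: np.ndarray, w_qkv: np.ndarray, w_out: np.ndarray) -> np.ndarray:
    """Single-head self-attention with a residual connection (toy stand-in for a ViT layer)."""
    d = x.shape[-1]
    q, k, v = np.split(x @ w_qkv, 3, axis=-1)
    scores = q @ k.T / np.sqrt(d)
    scores -= scores.max(axis=-1, keepdims=True)    # subtract row max for numerical stability
    weights = np.exp(scores)
    weights /= weights.sum(axis=-1, keepdims=True)  # softmax over all tokens in the sequence
    return x + (weights @ v) @ w_out


class ToyTextConditionedTokenizer:
    """Hypothetical, untrained tokenizer showing where caption embeddings enter."""

    def __init__(self, num_patches, patch_dim, d_model, num_latents, token_dim, text_dim, seed=0):
        rng = np.random.default_rng(seed)

        def init(*shape):
            return rng.normal(0.0, 0.02, shape)

        self.num_patches = num_patches
        self.patch_in = init(patch_dim, d_model)    # patch pixels -> model width
        self.text_in = init(text_dim, d_model)      # caption embeddings -> model width
        self.latents = init(num_latents, d_model)   # learnable latent slots (become image tokens)
        self.to_token = init(d_model, token_dim)    # bottleneck to compact image tokens
        self.from_token = init(token_dim, d_model)
        self.mask_token = init(1, d_model)          # placeholder for each patch to reconstruct
        self.patch_out = init(d_model, patch_dim)   # model width -> patch pixels
        self.enc = (init(d_model, 3 * d_model), init(d_model, d_model))
        self.dec = (init(d_model, 3 * d_model), init(d_model, d_model))

    def encode(self, patches, text_emb):
        """(num_patches, patch_dim), (num_text, text_dim) -> (num_latents, token_dim)."""
        seq = np.concatenate([patches @ self.patch_in, text_emb @ self.text_in, self.latents], axis=0)
        out = attention_block(seq, *self.enc)
        return out[-len(self.latents):] @ self.to_token  # keep only the latent slots

    def decode(self, tokens, text_emb):
        """(num_latents, token_dim), (num_text, text_dim) -> (num_patches, patch_dim)."""
        masks = np.repeat(self.mask_token, self.num_patches, axis=0)
        seq = np.concatenate([tokens @ self.from_token, text_emb @ self.text_in, masks], axis=0)
        out = attention_block(seq, *self.dec)
        return out[-self.num_patches:] @ self.patch_out  # keep only the patch slots
```

For example, a 256×256 RGB image cut into 16×16 patches gives 256 patches of 768 values each; with `num_latents=32` and `token_dim=8`, `encode` compresses it into a 32×8 array, and `decode` maps that array (plus the same caption embeddings) back to 256×768. Because the decoder also sees the caption, the 32 tokens do not need to re-encode what the text already says.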

ImageNet 256x256

ImageNet 512x512

ImageNet 256x256

ImageNet 512x512

@inproceedings{zha2025language,
  title={Language-guided image tokenization for generation},
  author={Zha, Kaiwen and Yu, Lijun and Fathi, Alireza and Ross, David A and Schmid, Cordelia and Katabi, Dina and Gu, Xiuye},
  booktitle={Proceedings of the Computer Vision and Pattern Recognition Conference},
  pages={15713--15722},
  year={2025}
}